School Safety Deck

Rapid Imaging Technologies has an extensive track record of developing and integrating innovative situational awareness technologies for some of the largest customers in the world, including the United States Military. Our software makes cameras smarter, using augmented reality and artificial intelligence to derive actionable intelligence from live video for a variety of stakeholders. It has performed in high-stress environments and serves as a critical component of any security platform that uses visual analytics. With an emphasis on bolstering school safety through enhanced security protocols and staff training, in Wisconsin and around the country, Rapid Imaging offers its unique video analytics solutions built on augmented reality and artificial intelligence.

Problem

Ensuring the safety of students and teachers is a multi-faceted challenge. While a variety of solutions compete to address it, no single solution (whether staff training, security cameras or entry protocols) is 100% effective, particularly if it is not constantly monitored, assessed and improved. To enhance effectiveness, it is vital to continuously monitor each component of your school safety plan, fuse the information captured from each data source and use it to improve each component over time.

Area of Interest | Video Analytics

While there are many elements to a school safety plan, including site security, entry/exit protocols, and surveillance and monitoring, Rapid Imaging is focused on deploying proven technologies to the data sources that provide visual information.

Rapid Imaging provides integrated software solutions that employ multiple technologies to derive actionable intelligence from live video streams. This is achieved with advanced analytics software that can run in the Cloud or be deployed to low-power devices on the Edge; Edge deployments eliminate the need for network connectivity.

Rapid Imaging seeks to deploy to two primary data sources. The first is school security cameras, where a convolutional neural network performing frame-by-frame object detection provides perpetual analysis of incoming video. The second is airborne cameras, which are deployed in emergency situations to provide situational awareness to First Responders, Pilots In Command, Sensor Operators, Incident Commanders and other Remote Observers.

Data Sources

Rapid Imaging has significant expertise in video processing. This includes one of the first successful applications of augmented reality and industry-leading artificial intelligence recognized by both Apple and NVIDIA. Rapid Imaging looks to deploy visual analytics on the following data sources to promote school safety.

Security Cameras

Perpetual Monitoring

Artificial Intelligence

- Perpetual Monitoring

- Live Processing

- Object Detection

- Data Fusion

Airborne Cameras

Emergency Situations

Augmented Reality

- Flight Application - RespondAR

- MISB Record

- GIS AR Overlays

- KML POI Support

Video Analytics Ecosystem

Rapid Imaging specializes in making cameras smarter by processing live video with advanced software and fusing various forms of data into a single stream. The overview above outlines the video analytics ecosystem Rapid Imaging provides to promote school safety.

Rapid Imaging uses two core technologies, Artificial Intelligence (“AI”) and Augmented Reality (“AR”). Each technology targets a particular type of data source (e.g., drone footage, security camera footage, bodycam footage). AI focuses on data sources requiring persistent, continuous analysis (e.g., security cameras or body cameras). AR focuses on applications where location data can be displayed to provide situational awareness to users.

Presently, Rapid Imaging has solutions focused on two data sources: drone video and security camera footage. Other data sources exist within the video ecosystem at many schools. Rapid Imaging has focused on these two first because of its experience with drone imagery and the prevalence of security cameras at schools across the state and the country.

Rapid Imaging’s current architecture is deployed as two software modules: an augmented reality application called RespondAR, used for drone footage, and an artificial intelligence module designed to provide perpetual monitoring of security camera footage.

For drone footage, Rapid Imaging provides an industry-leading augmented reality application used in conjunction with UAV airframes. This application is called RespondAR. It provides geospatial situational awareness to Remote Pilots, Incident Commanders and Remote Observers analyzing the live video feed in a web browser. RespondAR is a full-spectrum application. It provides baseline functionality such as flight control and video transmission. It also provides advanced features, including augmented reality overlays that place geographically correct location data on top of the live video feed in real time. To learn more about the RespondAR application and its features, please see the full description below.

For security camera footage, Rapid Imaging provides advanced artificial intelligence software for continuous monitoring of security camera video feeds. Rapid Imaging uses trained convolutional neural networks (explained in detail below) to identify relevant information (e.g., someone entering a school or carrying an object of interest). To learn more about our artificial intelligence technologies, please continue reading below.

With both software modules, Rapid Imaging provides situational awareness to numerous parties, including First Responders, Incident Commanders and staff. As footage is captured by drone or security camera, Rapid Imaging’s software ingests the video frames. It then runs its algorithms in conjunction with the Cloud over Wi-Fi or cellular connectivity. Finally, it delivers pertinent information to connected users by live-streaming to web browsers on smartphones, tablets, computers and command centers. Offline processing is also an option, but data dissemination is then limited to local viewers (i.e., viewers who have direct physical access to the video stream).

Rapid Imaging Technologies | Experience

Rapid Imaging is a software provider specializing in Augmented Reality (“AR”) and Artificial Intelligence (“AI”). Our AR software, SmartCam3D®, was created in 1996. It was used as the primary flight display for the NASA X-38 Re-Entry Vehicle, designed to bring Astronauts back from the International Space Station. Today, SmartCam3D® is fielded in all U.S. Army Shadow and Gray Eagle UAS Ground Control Stations. A 2005 study by the Air Force Research Laboratory concluded that SmartCam3D® enhanced situational awareness for Remote Pilots and improved point of interest acquisition time by 100%.

Rapid Imaging is strategically aligned with Perceptual Labs, a world-leading developer of optimized convolutional neural networks. Leading Perceptual Labs is Dr. Brad Larson, an expert in machine vision technologies. In 2010, Dr. Larson provided the first public demonstration of GPU-accelerated video processing on iOS devices. This inspired a number of popular applications, and in 2012, he published an open source framework called GPUImage that made it easy for people to do GPU-accelerated image and video processing on iOS devices and on the Mac. This framework has been used in tens of thousands of applications on the App Store, including Facebook, Twitter, Snapchat, YouTube Capture, and many others. It is currently the third-most downloaded open source framework in the history of iOS. In conjunction with Dr. Larson and Perceptual Labs, Rapid Imaging continues to build on its success in the video processing space by uniquely coupling artificial intelligence technologies with its existing software frameworks.

Augmented Reality

Pioneering legacy

- 1st Successful Augmented Reality Application

- Primary Flight Display on NASA X-38

- Deployment on all US Army UAS

- 20+ Year Legacy & 500+ Deployed Systems

Artificial Intelligence

Award-Winning Technology

- Presented to Apple and NVIDIA ML Teams

- Finalist NVIDIA Jetson Developers Challenge

- Poster Presentation GTC18

- Industry-Leading Object Detection @ 60fps


Artificial Intelligence

Perpetual Security Camera Monitoring

Site security and proper entry/exit protocols are paramount for a safe learning environment. To bolster site security and ensure proper entry and exit processes, many schools have turned to security cameras. However, security cameras are most often useful after an event. Unless they are constantly monitored, they do not provide adequate protection before an event unfolds. This is because most camera systems simply record data that may not be actively monitored, reviewed or fused with complementary information. To address this, Rapid Imaging has designed a convolutional neural network framework that perpetually monitors camera feeds. It alerts users when a camera captures information of interest based on “learned” categories.
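The alerting behavior described above can be pictured as a filter over per-frame detector output. This is a minimal sketch, not Rapid Imaging's implementation. The `AlertFilter` class, the (label, confidence) detection format, the 0.6 confidence threshold and the rule of waiting for several consecutive frames before alerting are all hypothetical choices made for illustration. The consecutive-frame rule is one way to suppress one-frame false positives.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class AlertFilter:
    """Hypothetical alert rule: alert when a watched category is detected with
    at least `min_confidence` in `min_consecutive` consecutive frames."""
    watched: set[str]
    min_confidence: float = 0.6
    min_consecutive: int = 3
    _streak: dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def update(self, detections: list[tuple[str, float]]) -> list[str]:
        """Feed one frame's (label, confidence) detections; return new alerts."""
        seen = {label for label, conf in detections
                if label in self.watched and conf >= self.min_confidence}
        alerts = []
        for label in self.watched:
            if label in seen:
                self._streak[label] += 1
                # Alert once, on the frame where the streak reaches the threshold.
                if self._streak[label] == self.min_consecutive:
                    alerts.append(label)
            else:
                self._streak[label] = 0  # streak broken: start counting again
        return sorted(alerts)


# Usage: hypothetical detector output for three consecutive frames.
monitor = AlertFilter(watched={"person", "backpack"}, min_consecutive=2)
frames = [
    [("person", 0.91)],
    [("person", 0.88), ("backpack", 0.40)],  # backpack is below the threshold
    [("person", 0.90)],
]
for i, dets in enumerate(frames):
    print(i, monitor.update(dets))
# 0 []
# 1 ['person']
# 2 []
```

Raising the alert only once per streak keeps a person who stays in view from generating an alert on every frame.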

How It Works

Rapid Imaging’s artificial intelligence technologies are based on convolutional neural networks. These networks are trained on user-provided information (e.g. Imagery or Video) and develop the ability to recognize “learned” categories and generalize across varying conditions (e.g. Lighting, Viewing Angle, etc.).
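One widely used way to help a network generalize across lighting and viewing conditions is data augmentation: training on randomly altered copies of each image. The source does not say which augmentations Rapid Imaging uses. The brightness scaling and horizontal flip below are illustrative assumptions. They operate on a grayscale image stored as a list of rows of 0-255 integers.

```python
import random

Image = list[list[int]]  # grayscale pixels, 0-255, row-major


def adjust_brightness(img: Image, factor: float) -> Image:
    """Simulate a lighting change by scaling every pixel, clamped to 0-255."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]


def flip_horizontal(img: Image) -> Image:
    """Simulate a mirrored viewpoint by reversing each row."""
    return [row[::-1] for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Return a randomly altered copy of `img` for one training pass."""
    out = adjust_brightness(img, rng.uniform(0.6, 1.4))
    if rng.random() < 0.5:
        out = flip_horizontal(out)
    return out


print(augment([[10, 200], [30, 40]], random.Random(0)))
```

Because a new variant is drawn on every pass, the network rarely sees exactly the same pixels twice. This pushes it toward features that survive lighting and orientation changes.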

Convolutional Neural Networks (“CNNs”) are computer programs designed to mimic human vision. Developing a CNN focused on a specific set of categories is straightforward with Rapid Imaging’s optimized frameworks.
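The "convolutional" in CNN refers to the core layer operation sketched below. A small filter (kernel) slides across the image and computes a weighted sum at each position. The result is a feature map that responds strongly wherever a pattern, such as an edge, appears.

This plain-Python illustration has some simplifications:
- It computes a single 2D convolution with no padding and stride 1. Strictly, this is cross-correlation, which is what deep-learning libraries compute.
- The edge-detecting kernel is hand-picked. A trained CNN instead learns its kernel values from the labeled data.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Valid (no padding), stride-1 2D cross-correlation as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (y, x).
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(fmap: list[list[float]]) -> list[list[float]]:
    """Non-linearity applied after the convolution: negative responses become 0."""
    return [[max(0.0, v) for v in row] for row in fmap]


# Vertical-edge kernel: responds where brightness increases from left to right.
edge_kernel = [[-1, 0, 1],
               [-1, 0, 1],
               [-1, 0, 1]]

# Dark region on the left, bright region on the right.
image = [[0, 0, 0, 9, 9, 9],
         [0, 0, 0, 9, 9, 9],
         [0, 0, 0, 9, 9, 9]]

print(relu(conv2d(image, edge_kernel)))  # [[0.0, 27.0, 27.0, 0.0]]
```

The flat regions produce 0 and the windows straddling the dark-to-bright boundary produce a strong response. A CNN stacks many such learned filters so that later layers respond to whole objects rather than edges.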

Depending on the desired network type, the training imagery is either categorized (for Image Classification) or labeled (for Object Detection); both are explained below. The imagery is loaded into a training computer, where a Network trains on the visual characteristics of the target categories. The Network “learns” these categories for future identification in the real world.
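The difference between the two kinds of training data can be made concrete with a pair of annotation records. The record types, field names and the pixel box convention (x_min, y_min, x_max, y_max) are hypothetical. The source does not specify Rapid Imaging's training-data format.

```python
from dataclasses import dataclass


@dataclass
class ClassificationSample:
    """Image Classification: one category summarizes the whole image."""
    image_path: str
    label: str


@dataclass
class Box:
    """One labeled object, located by a pixel bounding box."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


@dataclass
class DetectionSample:
    """Object Detection: every object of interest gets its own labeled box."""
    image_path: str
    boxes: list[Box]


# The same hypothetical fruit-basket photo, prepared for each network type.
cls_sample = ClassificationSample("basket.jpg", "banana")
det_sample = DetectionSample("basket.jpg", [
    Box("banana", 40, 30, 210, 120),
    Box("apple", 220, 60, 300, 140),
    Box("grapes", 90, 130, 200, 230),
])
print(cls_sample.label, [b.label for b in det_sample.boxes])
```

Detection labels take more effort to produce, since every object must be outlined. In exchange, they let the network report what is in the image and where.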

Image Classification

On the spectrum of Artificial Intelligence using Convolutional Neural Networks, Image Classification is “Level 1” identification, providing a general summary of an image or video frame. With Image Classification, a Neural Network can look at an image, like the fruit basket pictured below. It then gives the entire image a descriptive label representing the closest category among the Network’s learned categories. A Network trained on fruit, for example, might label the fruit basket “Banana” because that is the most representative descriptor among its learned categories (e.g., Apples, Bananas, Grapes, Pears). While this is useful for many applications, Image Classification leaves much to be desired. It can only describe an image in general terms, summarizing it with a single label from its list of known/learned categories.

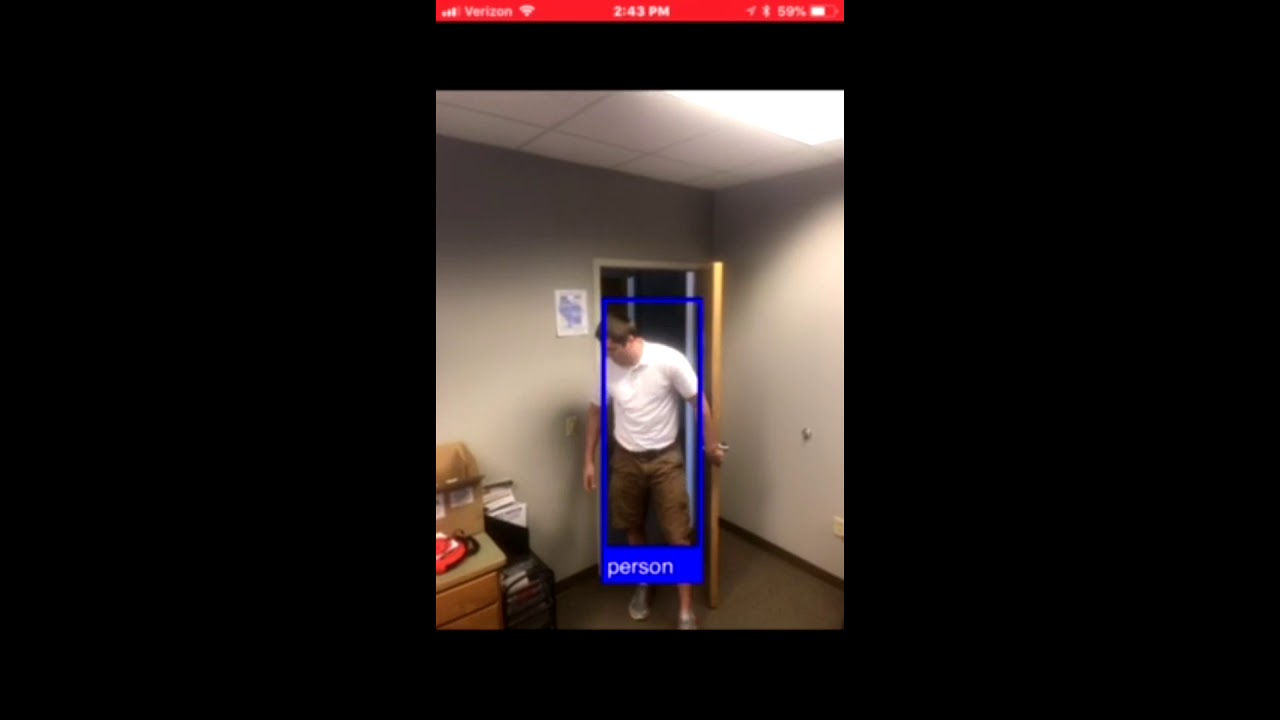
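The single-label behavior of Image Classification comes from how the network's output is read. The final layer produces one score per learned category, and a softmax turns the scores into probabilities. The highest-probability category then becomes the label for the entire image. The raw scores below are made-up numbers standing in for a network's output on the fruit basket.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Convert raw scores to probabilities summing to 1 (max-shifted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores: dict[str, float]) -> tuple[str, float]:
    """Return the single best label for the whole image and its probability."""
    labels = list(scores)
    probs = softmax([scores[k] for k in labels])
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical raw scores for the fruit-basket image.
label, p = classify({"apple": 1.2, "banana": 2.5, "grapes": 0.3, "pear": -0.4})
print(label)  # banana
```

The apples and grapes in the basket still raise their own scores, but only the winning category survives the final step. This is the limitation Object Detection addresses.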

Object Detection

Object Detection is a more complex form of identification using a Convolutional Neural Network. An Object Detection Network can look at an image, like the fruit basket above, and label multiple components within it. This provides descriptive labels for a series of objects and more insight about the image, video or scene in question. The labeled objects are based on the Network’s learned categories. A Network trained on fruit, for example, might label each type of fruit present in the image. That reveals more than an Image Classification Network, which simply summarizes the image with a single label. Object Detection Networks are highly useful across a variety of applications, including most of our current deployments, where we perform frame-by-frame object detection on live video.
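Object Detection Networks typically emit many candidate boxes, often several overlapping boxes for the same object. A post-processing step therefore keeps only the confident, non-duplicate ones. A common choice is greedy non-maximum suppression (NMS), sketched below. The source does not describe Rapid Imaging's post-processing, so treat this as a generic technique. The 0.5 score and 0.5 overlap (intersection-over-union) thresholds are illustrative.

```python
Box = tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels
Detection = tuple[str, float, Box]        # (label, score, box)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(dets: list[Detection], score_thr: float = 0.5,
        iou_thr: float = 0.5) -> list[Detection]:
    """Greedy per-label NMS: keep the highest-scoring box, drop same-label boxes
    overlapping a kept box by more than iou_thr, and repeat."""
    candidates = sorted((d for d in dets if d[1] >= score_thr),
                        key=lambda d: d[1], reverse=True)
    kept: list[Detection] = []
    for d in candidates:
        if all(k[0] != d[0] or iou(k[2], d[2]) <= iou_thr for k in kept):
            kept.append(d)
    return kept


raw = [
    ("banana", 0.92, (40, 30, 210, 120)),
    ("banana", 0.85, (45, 35, 205, 125)),   # near-duplicate of the first banana
    ("apple", 0.88, (220, 60, 300, 140)),
    ("grapes", 0.30, (90, 130, 200, 230)),  # below the score threshold
]
for label, score, box in nms(raw):
    print(label, score, box)
# banana 0.92 (40, 30, 210, 120)
# apple 0.88 (220, 60, 300, 140)
```

On live video this runs once per frame, after the network's forward pass. It therefore also counts against the per-frame time budget.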

Performance & Differentiators

Rapid Imaging’s optimized neural network frameworks perform frame-by-frame object detection on live video at up to 60 frames per second. To learn more about what differentiates our AI technology, see the highlights below.

On-Device

Optional

Rapid Imaging’s Convolutional Neural Network engines have the ability to run in the Cloud or on-device, making them operational in both connected and disconnected environments.
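The Cloud/on-device choice can be pictured as a simple routing decision made per deployment.

This is a hypothetical sketch:
- `run_local` and `run_cloud` are placeholder callables, not Rapid Imaging APIs.
- Running on-device when offline or when privacy mode is on follows the connected/disconnected and privacy trade-offs described in this section.
- Preferring the Cloud when connected is an assumed policy.

```python
from typing import Callable

Frame = bytes
Detector = Callable[[Frame], list[str]]  # placeholder: frame in, labels out


def choose_backend(connected: bool, privacy_mode: bool) -> str:
    """Pick where inference runs. On-device works offline and keeps frames local."""
    if privacy_mode or not connected:
        return "on-device"
    return "cloud"  # assumed preference when a network is available


def analyze(frame: Frame, connected: bool, privacy_mode: bool,
            run_local: Detector, run_cloud: Detector) -> list[str]:
    """Route one frame to the selected detector."""
    if choose_backend(connected, privacy_mode) == "on-device":
        return run_local(frame)
    return run_cloud(frame)


print(choose_backend(connected=False, privacy_mode=False))  # on-device
```

Keeping the same detector interface for both backends is what lets a deployment switch between connected and disconnected operation without changing the rest of the pipeline.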

Enhanced Privacy

Optional

When utilizing Rapid Imaging’s Convolutional Neural Networks offline and on-device, users can provide enhanced data privacy because data is not sent over networks or processed on remote servers.

Compatible

Rapid Imaging’s Convolutional Neural Network frameworks are small in size and compatible with many operating systems across a variety of devices.

Rapid Advancement

Rapid Imaging’s frameworks can be trained or re-trained in as little as 12-24 hours, making them ideally suited for aggressive development schedules.

Flexible & Optimized

Rapid Imaging’s Networks are optimized to take in any image-based dataset and train on its pre-labeled categories. Additionally, Network engines are less than 10 MB in size, which makes application deployments simple.
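To put the 10 MB figure in perspective, a model file's size is roughly its parameter count times the bytes stored per parameter. The helper below does that arithmetic. The source does not state the parameter count or numeric precision of Rapid Imaging's networks. The precisions listed are generic examples, and file-format overhead is ignored.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def max_params(size_mb: float, precision: str) -> int:
    """Largest parameter count that fits in size_mb (1 MB = 10**6 bytes here)."""
    return int(size_mb * 10**6) // BYTES_PER_PARAM[precision]


for prec in ("fp32", "fp16", "int8"):
    print(prec, max_params(10, prec))
# fp32 2500000
# fp16 5000000
# int8 10000000
```

A network in this size range is small enough to bundle inside a mobile app or copy to an edge device.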

Object Detection

Rapid Imaging’s AI technologies provide superior performance with live object detection at 30-45 frames per second on the NVIDIA Jetson TX1 and TX2 platforms and over 60 frames per second on iOS devices.
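Frame rate translates directly into the time available for each frame. To keep up with live video at f frames per second, detection on every frame must finish within 1000/f milliseconds. The helper below applies this to the rates quoted above.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame to sustain `fps` without dropping frames."""
    return 1000.0 / fps


for fps in (30, 45, 60):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
# 30 fps -> 33.3 ms per frame
# 45 fps -> 22.2 ms per frame
# 60 fps -> 16.7 ms per frame
```

The budget covers the whole per-frame pipeline (pre-processing, the network itself and any post-processing), not just the network's forward pass.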

Current Applications

To date, Rapid Imaging and its partners have deployed trained convolutional neural networks to a variety of problem sets, including airborne damage assessment, distracted driving, disease diagnostics and fixed-camera surveillance.

Airborne Intelligence

UAV Systems for School Safety

Situational awareness is paramount in any emergency. This is especially true where multiple responding parties are involved and some or many of them may be unfamiliar with the environment. Rapid Imaging recently took part in a Table Top exercise simulating a shooting at a high school. The First Responders involved included City Police, the County Sheriff’s Office, the City Fire Department, the City Emergency Medical Response Team, and First Responders from neighboring towns and counties. In a real incident, many of these First Responders, particularly those from neighboring areas, would be walking into a completely unknown environment, having never been to the school. To address this, Rapid Imaging has developed RespondAR, a tool that leverages the same situational awareness technologies the U.S. Military uses to keep soldiers safe. This tool is highly effective in providing aerial intelligence for both simulated (e.g., Table Top Exercises) and real emergency situations.

Smarter Cameras

RespondAR makes your UAV camera smarter by blending telemetry data from your aircraft with live video frames to produce MISB 0601 STD georeferenced video.

Leveraged Assets

RespondAR allows First Responders to leverage their team by providing geographic context to live video and visual overlays that enhance situational awareness.

Situational Awareness

RespondAR provides enhanced situational awareness to Pilots in Command, Sensor Operators, Incident Commanders and Remote Video Observers.

Mission Assured

RespondAR is powered by proven technology, SmartCam3D®, an augmented reality software that is deployed in all US Army UAV Systems including the Shadow and Gray Eagle Platforms.

Situational Awareness for First Responders

RespondAR is a UAV Application designed to provide geographic augmented reality overlays on live video. This provides enhanced situational awareness to Pilots In Command, Visual Observers, Sensor Operators, Incident Commanders and other remote video observers. RespondAR can be used by a variety of Emergency Response personnel, including: Fire Departments, Police Departments, State National Guards, Emergency Response Teams, State Emergency Management Offices, Federal Emergency Management Offices and the like. Geographic overlays include Points of Interest from a global GIS database, including: Road and Street Names, Historical Markers and other points of interest as well as imported points of interest provided by the user within the application or through imported KML Files.

Choose the perfect plan

RespondAR provides situational awareness by giving a geographic context to live video. Take a look at the Free and Paid Plans below to utilize RespondAR with your Emergency Response Team.

Live HD View

RespondAR provides a real-time High Definition downlink allowing the Pilot In Command to see exactly what the airborne camera sees. Full manual camera controls are included within the application allowing complete control of all camera settings including: shutter speed, aperture, ISO and much more.

In addition to the live HD view, users can record their footage to the SD card using RespondAR. This footage is saved as a .ts file if MISB recording is engaged.
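A .ts file is an MPEG-2 transport stream, a sequence of fixed 188-byte packets. Each packet starts with the sync byte 0x47 and carries a 13-bit packet identifier (PID). The PID identifies the elementary stream (video, audio or metadata) the payload belongs to. In MISB recordings, the KLV telemetry is typically carried in its own PID alongside the video.

The sketch below counts packets per PID. This is one quick way to check that a recording contains more than a single stream. It assumes a plain file of 188-byte packets. It does not parse the PAT/PMT tables that map each PID to a stream type. The file name in the usage comment is hypothetical.

```python
from collections import Counter

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def count_pids(data: bytes) -> Counter:
    """Count MPEG-2 transport-stream packets per PID."""
    counts = Counter()
    for off in range(0, len(data) - TS_PACKET_SIZE + 1, TS_PACKET_SIZE):
        pkt = data[off:off + TS_PACKET_SIZE]
        if pkt[0] != SYNC_BYTE:
            raise ValueError(f"lost sync at byte offset {off}")
        # PID = low 5 bits of header byte 1 followed by all 8 bits of byte 2.
        pid = ((pkt[1] & 0x1F) << 8) | pkt[2]
        counts[pid] += 1
    return counts


# Usage with a recording (hypothetical path):
# with open("flight_0001.ts", "rb") as f:
#     print(count_pids(f.read()))
```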

In addition to the live HD view, users can stream their MISB video to the cloud. This allows remote observers to see both the live video feed as well as the augmented reality overlays, providing enhanced situational awareness.

Auto Take-Off and Auto Landing

Automatically take off and land your aircraft with just a swipe of the finger on your smart device. Track your aircraft’s position and heading at a glance on a map. You can also use the map to set a new home point and even activate Return to Home, making flying easy and simple.

Real-Time AR Overlays

RespondAR provides real-time augmented reality overlays of geographic information from a global GIS database. These overlays are superimposed on the live video stream providing operators with enhanced situational awareness. Baseline GIS overlays include road and street names and traditional Points of Interest provided within tools like Google Earth. However, within the Enterprise Tier, Rapid Imaging provides enhanced levels of detail as well as loadable KML POIs and custom GIS data integration.

Baseline GIS AR Overlays are served from a global database provided by our partner, Garmin.

Users subscribing to RespondAR’s Premium Tier enjoy KML POI support. This lets them save their own Points of Interest in a KML file and load it into the RespondAR application, where the POIs are displayed over the live video feed.

Users subscribing to RespondAR’s Enterprise Tier enjoy enhanced levels of detail, which provide even more Points of Interest than the Baseline functionality offers. Additionally, Enterprise users may inquire about custom integration of GIS maps or other GIS information. This covers information that is neither in the Baseline functionality nor addressed by loadable KML POIs.

MISB Streaming

A powerful feature provided to Enterprise Users of RespondAR is a MISB streaming capability. This capability provides users with the ability to broadcast their live video feed with included GIS augmented reality overlays.

Frequently asked questions

RespondAR is a mobile application designed for use with small Unmanned Aircraft Systems. The app blends aircraft telemetry data with live video frames to produce an augmented reality (“AR”) scene in which AR overlays are displayed on the live video feed. These overlays represent various positional data-points including Road/Street Names, Points of Interest, Landmarks and the like.

RespondAR is currently compatible with all modern DJI platforms, including the Inspire lineup, the Phantom lineup (Phantom 3 and newer), the Mavic lineup and the Spark.

RespondAR is currently supported on iOS and will soon be supported on Android (July 2018).

RespondAR uses industry leading Augmented Reality (“AR”) software and is powered by Rapid Imaging’s proprietary SmartCam3D® product. SmartCam3D® provides situational awareness to soldiers and is currently deployed in all US Army unmanned systems, embedded within the Universal Ground Control Stations used to fly the Shadow and Gray Eagle platforms. A 2005 study by the Air Force Research Laboratory found that SmartCam3D® enhanced situational awareness of pilots and improved target acquisition time by 100%.

MISB 0601 STD is a motion imagery metadata standard required by the Department of Defense for airborne imagery. It defines how UAV telemetry data is packaged and carried alongside the video data packets to produce a combined stream of georeferenced video. The technology powering RespondAR, SmartCam3D®, was originally designed for NASA and is embedded within all US Army Unmanned Systems. There, SmartCam3D® uses MISB video from the Shadow and Gray Eagle platforms. Within RespondAR, SmartCam3D® creates the MISB stream by combining the geospatial metadata from the aircraft with the video frames coming from the camera. This matters because it gives the live video stream a geographic context. That context provides enhanced situational awareness to Pilots In Command, Sensor Operators, Incident Commanders and remote video observers.
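MISB ST 0601 carries telemetry as KLV (Key-Length-Value) data, structured as follows:
- A 16-byte Universal Key identifies the UAS Datalink Local Set.
- A BER-encoded length follows the key.
- The value is a series of tag-length-value items, closed by a 16-bit checksum item (tag 1).

The sketch below encodes a timestamp (tag 2), sensor latitude (tag 13), sensor longitude (tag 14) and the Local Set version number (tag 65). The tag numbers, integer mappings and checksum rule are taken from our reading of ST 0601. Verify them against the current revision of the standard before relying on them. This is not SmartCam3D's encoder, and the version value 17 is only an example.

```python
import struct

# 16-byte Universal Key of the MISB ST 0601 UAS Datalink Local Set.
UAS_LS_KEY = bytes.fromhex("060E2B34020B01010E01030101000000")


def ber_length(n: int) -> bytes:
    """BER length: short form below 128, otherwise long form (0x80 | byte count)."""
    if n < 128:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def item(tag: int, value: bytes) -> bytes:
    """One local-set item; tags below 128 encode as a single byte."""
    return bytes([tag]) + ber_length(len(value)) + value


def checksum16(data: bytes) -> int:
    """16-bit running sum; even-offset bytes add to the high byte, odd to the low."""
    total = 0
    for i, b in enumerate(data):
        total += b << (8 * ((i + 1) % 2))
    return total & 0xFFFF


def encode_packet(time_us: int, lat: float, lon: float, version: int = 17) -> bytes:
    """Build one KLV packet from a microsecond timestamp and sensor position."""
    items = (
        item(2, struct.pack(">Q", time_us))                            # Precision Time Stamp
        + item(13, struct.pack(">i", round(lat * (2**31 - 1) / 90)))   # Sensor Latitude
        + item(14, struct.pack(">i", round(lon * (2**31 - 1) / 180)))  # Sensor Longitude
        + item(65, bytes([version]))                                   # LS Version Number
    )
    body_len = len(items) + 4  # plus the checksum item: tag, length, 2-byte value
    # The checksum covers everything from the key through the checksum's tag and length.
    partial = UAS_LS_KEY + ber_length(body_len) + items + bytes([1, 2])
    return partial + struct.pack(">H", checksum16(partial))


pkt = encode_packet(1_530_000_000_000_000, 43.0, -89.4)
print(len(pkt), pkt.hex())
```

In a recording or stream, packets like this are carried in the transport stream's metadata PID. They are timed to match the video frames they describe.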

RespondAR is currently available on the FirstNet (AT&T) Store as well as the App Store. We hope to have the application on the Google Play Store in July 2018.

The difference between MISB Record and MISB Stream is that MISB recording allows a user to record their video feed with the augmented reality features within the video. MISB streaming on the other hand allows the user to stream the live feed (with augmented reality overlays) to one of multiple live-streaming services (e.g. AT&T FirstNet, Facebook, YouTube).

Geographic Information System Augmented Reality Overlays, or GIS AR Overlays, are map icons and linework superimposed on a live video feed. Unlike the traditional augmented reality you’d see in most AR apps on the App Store or Google Play Store, Rapid Imaging’s AR Overlays have a geographic context. Each overlay can be associated with real-world latitude/longitude coordinates. Because we use MISB georeferenced video, we can translate geocoordinates into optical screen coordinates. This allows RespondAR to lock an AR Tag to a specific location in the video feed (i.e., an optical screen coordinate) based on that Point of Interest’s real-world geocoordinates.
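Translating a geocoordinate into a screen coordinate can be sketched in two steps. First, express the POI's position relative to the camera in local east/north/up meters. Then project that offset through a pinhole camera aimed along the sensor's heading and pitch.

This is a simplified illustration, not SmartCam3D's algorithm. It assumes:
- A flat-earth approximation that holds over short distances.
- Square pixels.
- No camera roll and no lens distortion.
- A known camera pose and horizontal field of view. In MISB video these come from the metadata.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def geo_to_screen(cam_lat, cam_lon, cam_alt, heading_deg, pitch_deg,
                  poi_lat, poi_lon, poi_alt, hfov_deg, width, height):
    """Project a POI's lat/lon/alt to pixel (x, y), or None if it is not in view.

    heading: degrees clockwise from north; pitch: degrees, negative = looking down.
    """
    # 1. POI offset from the camera in local east/north/up meters (flat earth).
    de = math.radians(poi_lon - cam_lon) * EARTH_RADIUS_M * math.cos(math.radians(cam_lat))
    dn = math.radians(poi_lat - cam_lat) * EARTH_RADIUS_M
    du = poi_alt - cam_alt

    # 2. Camera axes (right, up, forward) expressed in east/north/up components.
    psi, theta = math.radians(heading_deg), math.radians(pitch_deg)
    right = (math.cos(psi), -math.sin(psi), 0.0)
    up = (-math.sin(psi) * math.sin(theta), -math.cos(psi) * math.sin(theta), math.cos(theta))
    fwd = (math.sin(psi) * math.cos(theta), math.cos(psi) * math.cos(theta), math.sin(theta))

    def project(axis):
        return axis[0] * de + axis[1] * dn + axis[2] * du

    x_c, y_c, z_c = project(right), project(up), project(fwd)
    if z_c <= 0:
        return None  # POI is behind the camera

    # 3. Pinhole projection; focal length in pixels from the horizontal FOV.
    f = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    px = width / 2 + f * x_c / z_c
    py = height / 2 - f * y_c / z_c  # screen y grows downward
    if 0 <= px < width and 0 <= py < height:
        return px, py
    return None


# A POI 1 km due north of a level, north-facing camera lands at the image center.
north_lat = 43.0 + math.degrees(1000 / EARTH_RADIUS_M)
print(geo_to_screen(43.0, -89.0, 100.0, 0.0, 0.0,
                    north_lat, -89.0, 100.0, 90.0, 1920, 1080))
```

Re-running this projection on every frame with that frame's metadata keeps an AR Tag pinned to the same real-world spot as the aircraft moves.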

KML POI support allows users to upload their own KML files with designated Points of Interest. This is useful for users who want to populate their video view with Points of Interest they consider important but that may not be in the global GIS database RespondAR uses. For example, an open area a safe distance from an incident scene might be designated as the Reunification Point. Such a Point of Interest would be relevant to include in the video but may not be present in the global GIS database. That database focuses on common POIs like road names, hospitals, golf courses and others you’d be used to seeing in Google Earth. This is a powerful feature for a First Response Team because it enhances situational awareness.
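A KML file stores each POI as a Placemark element with a name and a Point. The Point's coordinates are written as "longitude,latitude[,altitude]". The sketch reads point placemarks with Python's standard `xml.etree.ElementTree` and skips other geometry. The Reunification Point file is a made-up example, and this is not RespondAR's importer.

```python
import xml.etree.ElementTree as ET

KML_NS = {"kml": "http://www.opengis.net/kml/2.2"}


def read_pois(kml_text: str) -> list[tuple[str, float, float, float]]:
    """Return (name, lat, lon, alt) for every Point placemark in a KML document."""
    root = ET.fromstring(kml_text)
    pois = []
    for pm in root.iterfind(".//kml:Placemark", KML_NS):
        name = pm.findtext("kml:name", default="(unnamed)", namespaces=KML_NS)
        coords = pm.findtext("kml:Point/kml:coordinates", namespaces=KML_NS)
        if not coords:
            continue  # lines and polygons are skipped; only point POIs here
        parts = [float(v) for v in coords.strip().split(",")]
        lon, lat = parts[0], parts[1]  # KML writes longitude first
        alt = parts[2] if len(parts) > 2 else 0.0
        pois.append((name, lat, lon, alt))
    return pois


SAMPLE = """<kml xmlns="http://www.opengis.net/kml/2.2">
  <Document>
    <Placemark>
      <name>Reunification Point</name>
      <Point><coordinates>-89.4012,43.0731,0</coordinates></Point>
    </Placemark>
  </Document>
</kml>"""

print(read_pois(SAMPLE))  # [('Reunification Point', 43.0731, -89.4012, 0.0)]
```

Once loaded, each POI's latitude, longitude and altitude can be projected onto the video like any POI from the global database.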

“LOD” stands for Level of Detail and refers to the amount of GIS information provided within a specific view. Multiple levels of detail are available when calling a GIS server. Lower levels of detail may show only major highway names. Higher levels of detail show major highways plus all road and street names and icons representing various POIs (e.g., hospitals, parks, golf courses), all within the same video view. Depending on the application, users may require different levels of detail: some prefer all the information available, while others prefer a less cluttered view.
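The effect of level of detail can be illustrated with a simple filter. Each POI is tagged with the lowest LOD at which it appears, and a view shows only POIs at or below the selected level. The LOD numbers and categories below are hypothetical. They do not reflect how the GIS database or RespondAR actually assign levels.

```python
from dataclasses import dataclass


@dataclass
class Poi:
    name: str
    kind: str
    min_lod: int  # lowest level of detail at which this POI is shown


def visible_pois(pois: list[Poi], lod: int) -> list[Poi]:
    """A higher LOD shows everything a lower LOD shows, plus finer detail."""
    return [p for p in pois if p.min_lod <= lod]


pois = [
    Poi("I-94", "highway", 1),
    Poi("Main St", "street", 2),
    Poi("County Hospital", "hospital", 3),
    Poi("Riverside Park", "park", 3),
]
print([p.name for p in visible_pois(pois, 1)])  # ['I-94']
print([p.name for p in visible_pois(pois, 2)])  # ['I-94', 'Main St']
```

Because each level is a superset of the one below it, switching LOD only adds or removes overlays. POIs never jump between positions.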

Learn More

Rapid Imaging has spent the last two decades providing situational awareness tools in high-stress environments around the world to keep soldiers safe. Today, Rapid Imaging looks to provide schools, Emergency Responders and State and Local Governments with these same technologies to keep students and staff safe. If you’d like to learn more or have any questions, please contact us using one of the methods below.